In a previous article, we measured how calibrated Chat GPT 4 was at providing a 90% confidence interval. During the experiment, after explaining what is meant by a 90% confidence interval, Chat GPT would provide a stated 90% interval that would only contain the actual answer 60% of the time. For comparison, on average humans’ performance is at 52% (This metric is slightly updated due to a larger sample size since the previous article was written). Thankfully, providing calibrated probability estimates is not some innate talent determined by genetics nor a skill bestowed upon people by the Gods of statistics. It is a skill that can be taught and learned.

Calibration Training: Understanding One’s Own Uncertainty

HDR has built upon decades of research in this area through the works of Nobel Prize winner Daniel Kahneman and political scientist Phillip Tetlock, to build the most comprehensive online calibration training platform available. Through a series of tests and training videos, humans learn how under or overconfident they are when giving probabilistic estimates and strategies that help them give more accurate probability assessments. When used correctly these strategies help humans translate their uncertainty into quantitative terms.

We put Chat GPT 4 through a similar process. Using 62 events that occurred after its data cut-off point, we went through several rounds of forecasting with Chat GPT where we asked it to provide 90% confidence interval estimates on topics such as sports, economics, politics, and pop culture. We then followed up by teaching it the same subjective forecasting strategies we teach humans in our training.

Below are the results of teaching these methods to humans and Chat GPT and recording their performance. For humans, these strategies were taught sequentially in which humans received a series of 6 tests and had their performance recorded. For Chat GPT, two sessions were created in which the temperature was set to 0 to decrease “creativity” in responses or as some might describe it “noise”. One was given a simple explanation of 90% confidence intervals and asked to forecast 62 events, the other was asked to apply the strategies mentioned above when forecasting the same 62 events.

| Initial Performance | After Training and Strategies | |

| Humans | 52% (10K intervals, 1001 humans) | 78% (52K intervals, 1001 humans) |

| Chat GPT 4 | 64.5% (62 intervals, 1 session) | 58% (62 intervals, 1 session) |

When it comes to humans, teaching these strategies significantly improves calibration. While still not perfectly calibrated to a 90% level, after going through calibration training their overconfidence is greatly diminished. It is worth noting, that the average for humans is brought down by outliers. Essentially individuals, whom we suspect based on their recorded time to completion, quickly answer questions without reading them.

Chat GPT on the other hand, struggles to use these strategies effectively and gives slightly worse estimates. A closer examination reveals that the version that was taught the strategies starts with a much more narrow range, then sequentially widens the range, by small amounts on both ends. It did not discriminate between different questions and would modify its range by similar amounts for each forecast. The result is a range with a width closer to that of the other session. Fortunately, there is another way of calibrating Chat GPT.

Calibration Training Hard Calculated Adjustment

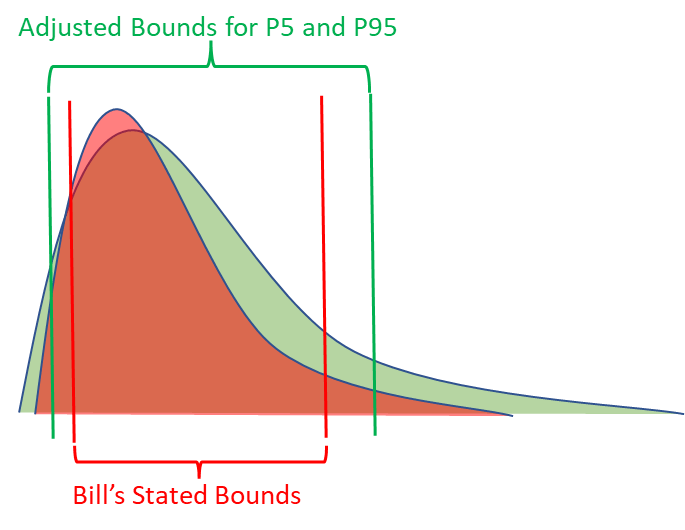

Anytime a human goes through calibration training, we know how to get calibrated 90% confidence intervals from them, even if they perform poorly during the calibration process. If Bill consistently gets 80% of the answers between his stated bounds, we know what metrics should be applied to his stated bounds in order to turn them into calibrated P5s and P95s. In other words, we know how to adjust for Bill’s overconfidence.

The exact same process can be applied to Chat GPT or other language models. To test this, we divided the set of 62 forecasts into a train and test set, with 31 forecasts in each. We calculated what the average adjustment would need to be for both the lower and upper bounds to be 90% calibrated for only the training set of questions. Then applied these adjustments to the test set of questions.

| Initial Stated Bounds | After Applying Adjustment | |

| Train Set | 68% (31 intervals) | 90.3% |

| Test Set | 61% (31 intervals) | 87.1% |

(Adjustments are calculated by normalizing the data on a scale of 0 to 1 with 0 = stated LB and 1 = stated UB)

This process was repeated 1000 times with different random samplings for the train and test data sets. After applying the adjustments developed from the training data sets, the average percent correct for the test data set bounds was 89.3%, which is nearly perfectly calibrated.

Practical Applications

Now that we have a method to obtain calibrated 90% confidence intervals from Chat GPT, can we use it to replace analysts’ subjective judgment when making decisions? Not exactly. The subjective confidence intervals given by Chat GPT should be viewed as initial baseline estimates. While calibrated to a 90% level, the intervals are much wider than what we would expect experts to provide. The information value Chat GPT has on a certain forecast is less than what we would expect from an expert on that topic.

For example, one of the forecasting questions was estimating Microsoft’s total 2022 revenue. After applying the adjustments to calibrate it, the output was a very wide range of $87 – 369 billion. I’m not a financial analyst with a focus on the tech industry, but I imagine obtaining a similar calibrated subjective forecast from financial analysts in January 2022 (Chat GPT’s data cut-off date) would result in a much more narrow range.

| Adjusted LB (P5) | Adjusted UB (P95) | Actual Answer | |

| Microsoft Total 2022 Revenue | $86.7 Billion | $379.2 Billion | $198.3 Billion |

The advantage Chat GPT and other generative AIs have is their speed and cost. In many models HDR builds for clients to help them make multi-million or even billion-dollar decisions, we’ll have a list of hundreds of variables that our client knows have an impact on the decision, but they are unsure how to quantitatively define them or who they should get to estimate them. It can take weeks to obtain estimates from experts or to collect data from other departments, while Chat GPT will take less than 5 minutes.

The key usefulness of starting with a calibrated baseline estimate is the implications of calculating the expected value of perfect information (EVPI). In every model we build, we calculate what the EVPI is for every variable. The EVPI is a monetized sensitivity analysis that computes how valuable it would be to eliminate uncertainty for a certain variable. It allows the analysts to pinpoint exactly how much effort they should spend analyzing certain variables. But this only works if there are initial calibrated estimates in the model.

In a follow-up article, I will be reviewing how HDR is incorporating Chat GPT in its decision models. This will include how we are utilizing it to estimate 90% confidence intervals within the model, how it can be prompted to build cashflow statements, and the implications of auto-generated EVPIs for follow-up analysis.

NOTE: Open AI released a major update for Chat GPT on November 6th. During this update, the data cut-off date was changed to April 2023. All interactions with Chat GPT mentioned in this article occurred in September and October of this year when the data-cutoff point was still January 2022 for Chat GPT 4.