Background

As a senior quantitative analyst at Hubbard Decision Research, I spend a significant portion of my day creating Monte Carlo simulations to analyze complex investment problems. The process involves decomposing the problem into relevant variables, quantifying uncertainty, building a dynamic cash flow statement, and generating thousands of simulations. Using our Excel-based risk-return analysis (RRA) template, this process can take anywhere from 30 minutes to 6 hours, depending on the complexity of the problem and my familiarity with the topic.

Utilizing LLMs in Excel

Recently, I explored the possibility of leveraging large language models (LLMs) to automate the initial analysis using our RRA template. By connecting to ChatGPT via an API, I provided the LLM with a description of the investment problem and an explanation of how to use the template. I then asked it to generate Python code that would populate the template with values and formulas to complete the initial analysis.

To test this approach, I used several simple investment problems, such as evaluating real estate as a rental property investment. The LLM successfully decomposed the problem into relevant variables, including some that might not have been obvious to a non-expert, such as annual rent increases and renovation costs. It then quantified the uncertainty in each variable by estimating a probability distribution for it.
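To make the step from variables to probability distributions concrete, here is a minimal sketch in Python. It assumes each variable is described by a 90% confidence interval and modeled as a normal distribution. The variable names, the interval values, and the helpers `normal_from_ci` and `simulate` are all hypothetical illustrations, not the contents of the RRA template or the output of any LLM.

```python
import random
from statistics import NormalDist

# Hypothetical 90% confidence intervals (lower, upper) for a rental property.
# These numbers are illustrative only, not output from any model.
VARIABLES = {
    "monthly_rent": (1800.0, 2600.0),
    "annual_rent_increase": (0.01, 0.05),
    "renovation_cost": (10000.0, 40000.0),
}

# A central 90% interval of a normal distribution spans about +/- 1.645 sigma.
Z_90 = NormalDist().inv_cdf(0.95)


def normal_from_ci(lower, upper):
    """Return a normal distribution whose central 90% interval is (lower, upper)."""
    mean = (lower + upper) / 2
    sigma = (upper - lower) / (2 * Z_90)
    return NormalDist(mean, sigma)


def simulate(variables, trials, seed=0):
    """Draw `trials` samples per variable and return {name: [samples]}."""
    rng = random.Random(seed)
    draws = {}
    for name, (lower, upper) in variables.items():
        dist = normal_from_ci(lower, upper)
        draws[name] = [rng.gauss(dist.mean, dist.stdev) for _ in range(trials)]
    return draws
```

Calling `simulate(VARIABLES, 10000)` yields one list of 10,000 samples per variable, with roughly 90% of each list falling inside the stated interval. A normal distribution is only one possible choice; skewed quantities such as renovation costs may be better served by a lognormal.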

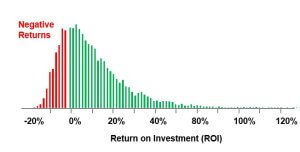

Our previous calibration testing on LLMs like ChatGPT-4 and Claude Opus has shown that while these models can provide quantitative estimates for probability distributions, they tend to be overconfident. To calibrate these estimates, we can measure their overconfidence and adjust the estimates accordingly. For example, if an LLM's 90% confidence intervals contain the true value only 60% of the time, we know how much to widen each interval to achieve the desired level of calibration.

The LLM then used simulated values for these variables, generated automatically from the defined probability distributions, to create a dynamic cash flow statement with a calculated NPV.
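The interval-widening adjustment mentioned above can be sketched as follows. This is one possible approach, not necessarily the method we use in practice: it assumes estimation errors are normally distributed, so an interval that captures the truth only 60% of the time is treated as a 60% interval and stretched to the width of a 90% interval. The function names `widening_factor` and `calibrate_interval` are hypothetical.

```python
from statistics import NormalDist


def widening_factor(stated_confidence, observed_hit_rate):
    """Factor by which to widen an interval's half-width.

    Assumes normally distributed errors: an interval that captures the truth
    `observed_hit_rate` of the time is treated as a narrower central interval
    of the same normal distribution.
    """
    std_normal = NormalDist()
    z_target = std_normal.inv_cdf(0.5 + stated_confidence / 2)
    z_actual = std_normal.inv_cdf(0.5 + observed_hit_rate / 2)
    return z_target / z_actual


def calibrate_interval(lower, upper, stated_confidence=0.90, observed_hit_rate=0.60):
    """Widen (lower, upper) symmetrically about its midpoint."""
    factor = widening_factor(stated_confidence, observed_hit_rate)
    mid = (lower + upper) / 2
    half = (upper - lower) / 2 * factor
    return mid - half, mid + half
```

Under this assumption, a stated 90% interval with a 60% hit rate needs to be roughly twice as wide (a factor of about 1.95). Symmetric widening is a simplification; for quantities that cannot go below zero, the adjustment might be better applied on a log scale.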

LLMs Make Mistakes in Excel Just Like Humans

Upon manual review, I discovered that the LLM had made some mistakes in the cash flow statement, such as using the wrong management fees formula, which made the investment look much worse than it actually was. Having audited countless cash flow models made by coworkers and clients alike, I found these errors eerily similar to those a human might make.

Testing different investment problems and LLMs yielded similar results. The LLMs consistently decomposed the problems into logical components and provided reasonable, albeit overconfident, estimates for the variables. However, they often made at least one mistake in the cash flow statement, ranging from incorrect signs (+/-) to misunderstood relationships between variables.
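Some of these mistakes, sign errors in particular, lend themselves to simple automated checks before the manual review. The sketch below is a hypothetical illustration rather than part of our template: it assumes a convention in which inflows are stored as non-negative numbers and outflows as non-positive numbers, and the management fee formula shown (a fixed share of rent) is an assumed example, not the formula from the model I reviewed.

```python
def npv(rate, cash_flows):
    """Net present value; cash_flows[0] occurs today (year 0)."""
    return sum(cf / (1 + rate) ** t for t, cf in enumerate(cash_flows))


def yearly_cash_flow(rent, management_fee_rate, maintenance):
    """Rent comes in; the fee (a share of rent) and maintenance go out."""
    return rent - management_fee_rate * rent - maintenance


def audit_line_items(inflows, outflows):
    """Return a list of sign problems, assuming inflows >= 0 and outflows <= 0."""
    problems = []
    for name, value in inflows.items():
        if value < 0:
            problems.append(f"inflow '{name}' is negative")
    for name, value in outflows.items():
        if value > 0:
            problems.append(f"outflow '{name}' is positive")
    return problems
```

For example, `audit_line_items({"rent": 24000}, {"management_fee": 2400})` flags the management fee because it was entered as a positive outflow. Checks like this catch only mechanical errors; a misunderstood relationship between variables still requires a human reading the formulas.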

Viewing LLMs as Very Fast Interns

Despite these limitations, I found that using LLMs can significantly improve my productivity when starting to analyze any investment scenario. The main benefit is speed, as an LLM can create an initial model in just a few minutes. Rather than spending hours researching the most important aspects of an investment problem, I can delegate this task to an LLM. It’s as if these LLMs are my very fast but error-prone interns.

As LLMs continue to develop, their accuracy will likely improve. Just as senior analysts and managers must check the work of interns and junior analysts, humans will still need to audit any analysis conducted by LLMs. Analysts can integrate LLMs into their workflows to generate initial models quickly, but they must always review those models carefully for errors.