by Joey Beachum | Jun 29, 2019 | Commercial Real Estate, How To Measure Anything Blogs, News

Overview:

- Quantitative commercial real estate modeling is becoming more widespread, but is still limited in several crucial ways

- You may be measuring variables unlikely to improve the decision while ignoring more critical variables

- Some assessment methods can create more error than they remove

- A sound quantitative model can significantly boost your investment ROI

Quantitative commercial real estate (CRE) modeling, once the province of only the high-end CRE firms on the coasts, has become more widespread – for good reason. CRE is all about making good decisions about investments, after all, and research has repeatedly shown that even a basic statistical algorithm can outperform human judgment<fn>Dawes, R. M., Faust, D., & Meehl, P. E. (n.d.). Statistical Prediction versus Clinical Prediction: Improving What Works. Retrieved from http://meehl.umn.edu/sites/g/files/pua1696/f/155dfm1993_0.pdf</fn><fn>N. Nohria, W. Joyce, and B. Roberson, “What Really Works,” Harvard Business Review, July 2003</fn>.

Statistical modeling in CRE, though, is still limited for a few reasons, which we’ll cover below. Many of these limitations actually result in more error (one common misconception is that merely having a model improves accuracy, but sadly that’s not the case). Even a few percentage points of error can result in significant losses, and any investor who has suffered from a bad investment knows all too well how that feels. Better quantitative modeling can decrease the chance of failure.

Here’s how to start.

The Usual Suspects: Common Variables Used Today

Variables are what you’re using to create probability estimates – and, really, any other estimate or calculation. If we can pick the right variables, and figure out the right way to measure them (more on that later), we can build a statistical model that has more accuracy and less error.

Most commercial real estate models – quantitative or otherwise – make use of the same general variables. The CCIM Institute, in its 1Q19 Commercial Real Estate Insights Report, discusses several, including:

- Employment and job growth

- Gross domestic product (GDP)

- Small business activity

- Stock market indexes

- Government bond yields

- Commodity prices

- Small business sentiment and confidence

- Capital spending

Data for these variables is readily available. For example, you can go to CalculatedRiskBlog.com and check out their Weekly Schedule for a list of all upcoming reports, like the Dallas Fed Survey of Manufacturing Activity, or the Durable Goods Orders report from the Census Bureau.

The problem, though, is twofold:

- Not all measurements matter equally, and some don’t matter at all.

- It’s difficult to gain a competitive advantage if you’re using the same data in the same way as everyone else.

Learning How to Measure What Matters in Commercial Real Estate

In How to Measure Anything: Finding the Value of Intangibles in Business, Doug Hubbard explains a key theme of the research and practical experience he and others have amassed over the decades: not everything you can measure matters.

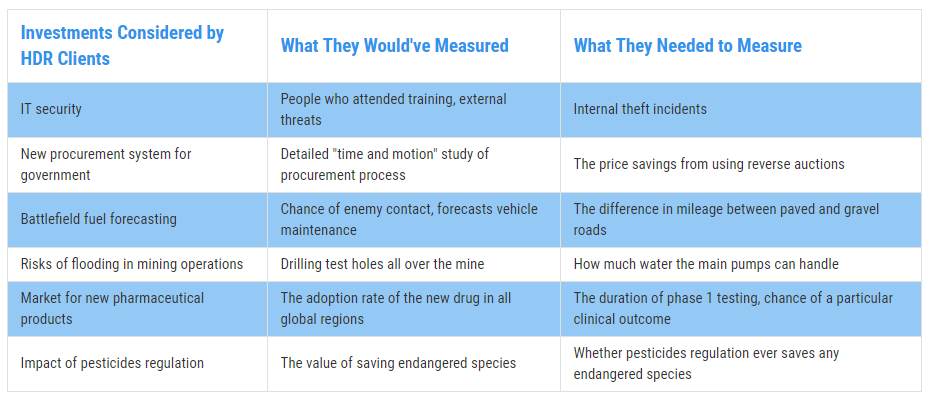

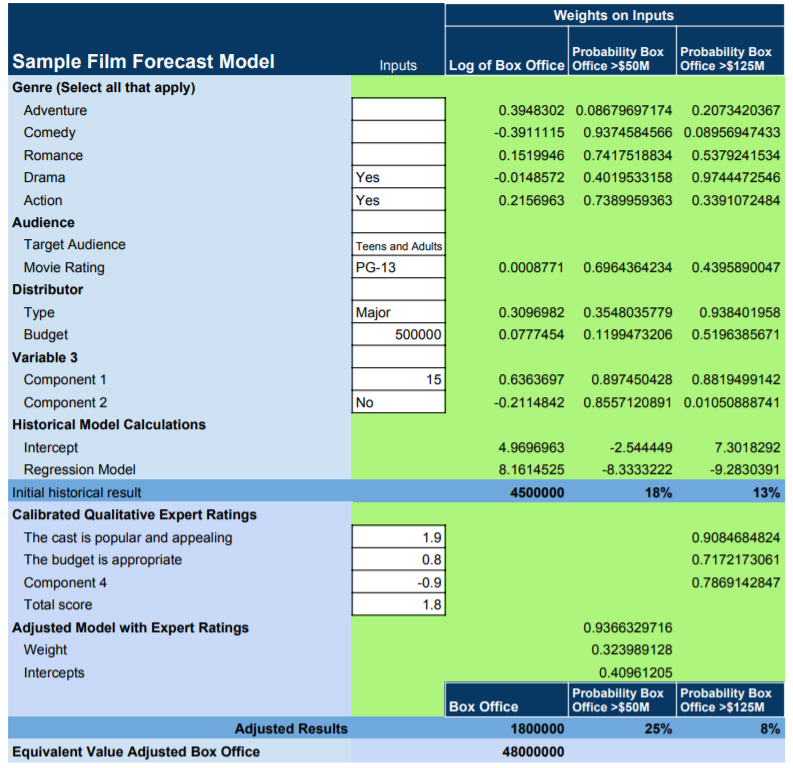

When we say “matters,” we’re basically saying that the variable has predictive power. For example, check out Figure 1. These are cases where the variables our clients were initially measuring had little to no predictive power compared to the variables we found to be more predictive. This is called measurement inversion.

Figure 1: Real Examples of Measurement Inversion

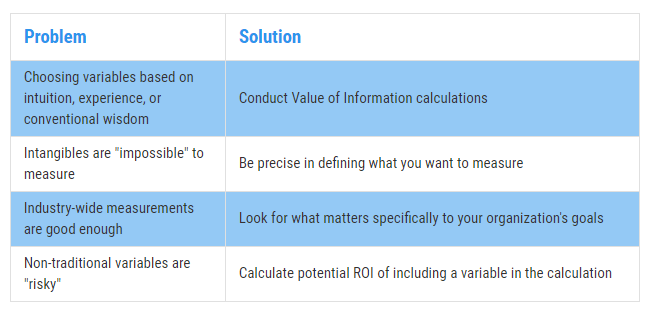

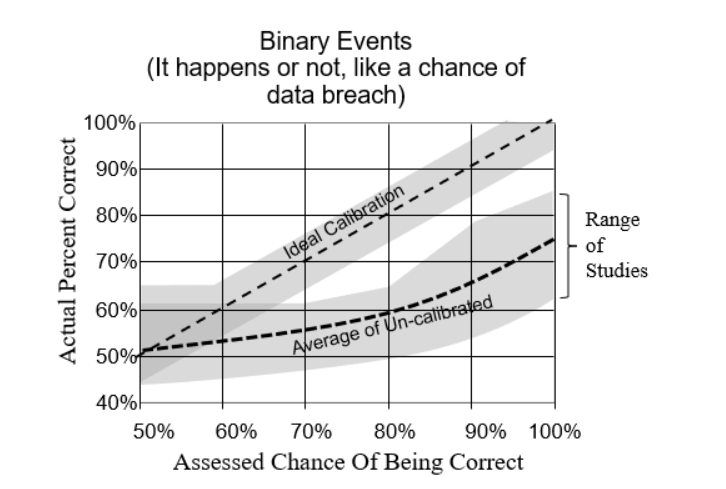

The same principle applies in CRE. Why does measurement inversion exist? There are a few reasons:

- Variables are often chosen based on intuition, conventional wisdom, or experience, not statistical analysis or testing.

- Decision-makers often assume that industries are more monolithic than they really are when it comes to data and trends (i.e. all businesses are sufficiently similar that broad data is good enough).

- Intangibles that should be measured are viewed as “impossible” to measure.

- Looking into other, “non-traditional” variables comes with risk that some aren’t willing to take.

(See Figure 2 below.)

Figure 2: Solving the Measurement Inversion Problem

The best way to begin overcoming measurement inversion is to get precise with what you’re trying to measure. Why, for example, do CRE investors want to know about employment? Because if too many people in a given market don’t have jobs, then that affects vacancy rates for multi-family units and, indirectly, vacancy rates for office space. That’s pretty straightforward.

So, when we’re talking about employment, we’re really trying to measure vacancy rates. Investors really want to know the likelihood that vacancy rates will increase or decrease over a given time period, and by how much. Employment trends can start you down that path, but by themselves they aren’t enough. You need more predictive power.

Picking and defining variables is where a well-built CRE quantitative model really shines. You can use data to test variables and tease out not only their predictive power in isolation (through decomposition and regression), but also discover relationships with multi-variate analysis. Then, you can incorporate simulations and start determining probability.
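As a rough illustration of testing variables for predictive power, the sketch below ranks two candidate predictors by the strength of their correlation with quarterly vacancy changes. Every number and variable name here is made up for the example, and a simple Pearson correlation stands in for the fuller regression and multi-variate analysis a real model would use.

```python
from math import sqrt

def pearson(xs, ys):
    """Pearson correlation coefficient between two equal-length sequences."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    spread_x = sqrt(sum((x - mean_x) ** 2 for x in xs))
    spread_y = sqrt(sum((y - mean_y) ** 2 for y in ys))
    return cov / (spread_x * spread_y)

# Made-up quarterly changes in vacancy rate (percentage points).
vacancy_change = [0.5, -0.2, 0.1, -0.4, 0.3, -0.1, 0.6, -0.3]

# Made-up candidate predictors observed over the same quarters.
candidates = {
    "conventional_indicator": [2.1, 2.0, 2.2, 2.1, 1.9, 2.0, 2.1, 2.2],
    "alternative_indicator": [-1.0, 0.5, -0.1, 0.9, -0.7, 0.2, -1.1, 0.6],
}

# Rank candidates by absolute correlation with the quantity we care about.
strength = {
    name: abs(pearson(values, vacancy_change))
    for name, values in candidates.items()
}
ranked = sorted(strength, key=strength.get, reverse=True)

for name in ranked:
    print(f"{name}: |r| = {strength[name]:.2f}")
```

With only eight data points this proves nothing on its own; the point is that predictive power is something you test against the data rather than assume.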

For example, research has shown<fn>Heinig, S., Nanda, A., & Tsolacos, S. (2016). Which Sentiment Indicators Matter? An Analysis of the European Commercial Real Estate Market. ICMA Centre, University of Reading</fn> that “sentiment,” or the overall mood or feeling of investors in a market, isn’t something that should be readily dismissed just because it’s hard to measure in any meaningful way. Traditional ways to measure market sentiment can be dramatically improved by incorporating tools that we’ve used in the past, like Google Trends. (Here’s a tool we use to demonstrate a more predictive “nowcast” of employment using publicly-available Google Trend information.)

To illustrate this, consider the following. We were engaged by a CRE firm located in New York City to develop quantitative models to help them make better recommendations to their clients in a field that is full of complexity and uncertainty. Long story short, they wanted to know something every CRE firm wants to know: what variables matter the most, and how can we measure them?

We conducted research and gathered estimates from CRE professionals involving over 100 variables. By conducting value of information calculations and Monte Carlo simulations, along with using other methods, we came to a conclusion that surprised our client but naturally didn’t surprise us: many of the variables had very little predictive power – and some had far more predictive power than anyone thought.

One of the latter variables wound up reducing uncertainty in price by 46% for up to a year in advance, meaning the firm could more accurately predict price changes – giving them a serious competitive advantage.
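To give a feel for what a value of information calculation involves, here is a minimal sketch that uses a Monte Carlo simulation to estimate the expected value of perfect information for a single invest-or-pass decision. The payoff formula, the distributions, and all parameters are assumptions invented for the example; they are not taken from the client model described above.

```python
import random
from statistics import mean

rng = random.Random(42)  # fixed seed so the sketch is repeatable

def simulate_payoff(rng):
    """Draw one hypothetical net payoff (in $ millions) for an investment."""
    rent_growth = rng.gauss(0.02, 0.03)   # assumed annual rent growth
    vacancy = rng.uniform(0.05, 0.20)     # assumed vacancy rate
    # Hypothetical payoff formula: growth helps, vacancy above 10% hurts.
    return 40 * rent_growth - 10 * (vacancy - 0.10)

payoffs = [simulate_payoff(rng) for _ in range(10_000)]

# With current information we either invest (and earn the average payoff)
# or pass (and earn nothing), whichever is better on average.
value_now = max(mean(payoffs), 0.0)

# With perfect information we would invest only in the scenarios that pay off.
value_perfect = mean(max(p, 0.0) for p in payoffs)

# The difference is the most it could be worth to eliminate the uncertainty.
evpi = value_perfect - value_now
print(f"Expected value of perfect information: ${evpi:.2f}M")
```

Repeating this kind of calculation variable by variable is one way to see which measurements deserve more effort and which are unlikely to change the decision.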

Knowing what to measure and what data to gather can give you a competitive advantage as well. However, one common source of data – inputs from subject-matter experts, agents, and analysts – is fraught with error if you’re not careful. Unfortunately, most organizations aren’t.

How to Convert Your Professional Estimates From a Weakness to a Strength

We mentioned earlier how algorithms can outperform human judgment. The reasons are numerous, and we talk about some of them in our free guide, Calibrated Probability Assessments: An Introduction.

The bottom line is that there are plenty of innate cognitive biases that even knowledgeable and experienced professionals fall victim to. These biases introduce potentially disastrous amounts of error that, when left uncorrected, can wreak havoc even with a sophisticated quantitative model. (In The Quants, Scott Patterson’s best-selling chronicle of quantitative wizards who helped engineer the 2008 collapse, the author explains how overly-optimistic, inaccurate, and at-times arrogant subjective estimates undermined the entire system – to disastrous results.)

The biggest threat is overconfidence, and unfortunately, the more experience a subject-matter expert has, the more overconfident he/she tends to be. It’s a catch-22 situation.

You need expert insight, though, so what do you do? First, understand that human judgments are like anything else: variables that need to be properly defined, measured, and incorporated into the model.

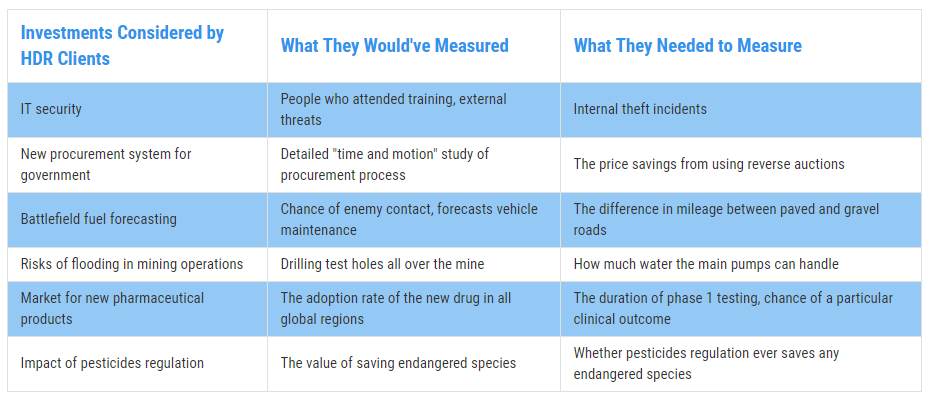

Second, these individuals need to be taught how to control for their innate biases and develop more accuracy with making probability assessments. In other words, they need to be calibrated.

Research has shown how calibration training often results in measurable improvements in accuracy and predictive power when it comes to probability assessments from humans. (And, at the end of the day, every decision is informed by probability assessments whether we realize it or not.) Thus, with calibration training, CRE analysts and experts can not only use their experience and wisdom, but quantify it and turn it into a more useful variable. (Click here for more information on Calibration Training.)
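One simple way to check calibration is to compare an expert's stated 90% confidence intervals against what actually happened. The sketch below does that with made-up intervals and outcomes; a well-calibrated estimator's 90% intervals should contain the true value roughly nine times out of ten.

```python
def interval_hit_rate(intervals, actuals):
    """Share of actual values that fell inside the stated (low, high) ranges."""
    hits = sum(
        1 for (low, high), actual in zip(intervals, actuals)
        if low <= actual <= high
    )
    return hits / len(actuals)

# Made-up 90% confidence intervals from one analyst for ten past quantities.
intervals = [
    (4.0, 6.0), (10.0, 12.0), (1.5, 2.5), (30.0, 35.0), (7.0, 8.0),
    (0.5, 1.0), (20.0, 22.0), (3.0, 3.5), (15.0, 18.0), (50.0, 60.0),
]
# Made-up outcomes for the same ten quantities.
actuals = [5.2, 13.1, 2.0, 28.0, 7.5, 1.4, 21.0, 3.9, 16.0, 66.0]

rate = interval_hit_rate(intervals, actuals)
print(f"Stated confidence: 90%, observed hit rate: {rate:.0%}")
```

A hit rate far below the stated confidence, as in this invented data, is the signature of overconfidence: the ranges are too narrow.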

Including calibrated estimates can take one of the biggest weaknesses firms face and turn it into a key, valuable strength.

Putting It All Together: Producing an ROI-Boosting Commercial Real Estate Model

How do you overcome this challenge? Unfortunately, there’s no magic button or piece of software that you can buy off the shelf to do it for you. A well-built CRE model, incorporating the right measurements and a few basic statistical concepts based on probabilistic assessments, is what will improve your chances of generating more ROI – and avoiding costly pitfalls that routinely befall other firms.

The good news is that CRE investors don’t need an overly-complicated monster of a model to make better investment decisions. Over the years we’ve taught companies how incorporating just a few basic statistical methods can improve decision-making over what they were doing at the time. Calibrating experts, incorporating probabilities into the equation, and conducting simulations can, just by themselves, create meaningful improvements.

Eventually, a CRE firm should get to the point where it has a custom, fully-developed commercial real estate model built around its specific needs, like the model mentioned previously that we built for our NYC client.

There are a few different ways to get to that point, but the ultimate goal is to be able to deliver actionable insights, like “Investment A is 35% more likely than Investment B to achieve X% ROI over the next six months,” or something to that effect.
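A statement of that kind can come out of a simulation as simple as the following sketch. The two investments, their ROI distributions (assumed normal here for convenience), and the 7% target are all hypothetical.

```python
import random

def prob_hits_target(mean_roi, sd_roi, target, trials, rng):
    """Estimate the chance that a simulated ROI meets or beats the target."""
    hits = sum(
        1 for _ in range(trials)
        if rng.gauss(mean_roi, sd_roi) >= target
    )
    return hits / trials

rng = random.Random(7)   # fixed seed so the sketch is repeatable
target = 0.07            # hypothetical ROI target of 7%
trials = 20_000

# Hypothetical ROI assumptions for two investments: (mean, standard deviation).
p_a = prob_hits_target(0.08, 0.05, target, trials, rng)
p_b = prob_hits_target(0.05, 0.05, target, trials, rng)

# How much more likely A is than B to reach the target, in relative terms.
relative_advantage = p_a / p_b - 1

print(f"P(A hits target) = {p_a:.1%}")
print(f"P(B hits target) = {p_b:.1%}")
print(f"A is {relative_advantage:.0%} more likely than B to hit the target")
```

In a real model the inputs would come from calibrated estimates and historical data rather than two hand-picked normal distributions.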

It just takes going beyond the usual suspects: ill-fitting variables, uncalibrated human judgment, and doing what everyone else is doing because that’s just how it’s done.

by Joey Beachum | Jun 18, 2019 | News, The Failure of Risk Management Blogs

Risk management methodology, until very recently, was based mostly on pseudo-quantitative tools like risk matrices. The use of these tools has actually introduced more error into decision-making than it removed, as research has shown, and organizations are steadily coming around to more scientific quantitative methods – like Applied Information Economics (AIE). AIE is prominently cited in a new piece in the ISACA Journal, the publication of ISACA, a nonprofit, independent association that advocates for professionals involved in information security, assurance, risk management and governance.

The feature piece doesn’t bury the lede. The author says, in the opening paragraph, what Doug has been preaching for years: that “risk matrices do not really work. Worse, they lead to a false sense of security.” This is one of the main themes Doug discusses in The Failure of Risk Management, the second edition of which is due for publication this year. The article sums up several of the main reasons Doug and others have given as to why risk matrices don’t work, ranging from a lack of clear definitions to a failure to assign meaningful probabilities and cognitive biases that lead to poor assessments of probability and risk.

After moving through explanations of various aspects of effective risk models – i.e. tools like decomposition, Monte Carlo simulations, and the like – the author of the piece concludes with a simple statement that sums up the gist of what Applied Information Economics is designed to do: “There are better alternatives to risk matrices, and, with a little time and effort, it is possible to manage risk using terminology and methods that everyone can, at least intuitively, understand.”

The entire piece can be found here. For an introduction into AIE and how our methods are based on sound scientific research and offer measurable improvement over other systems, check out our Intro to Applied Information Economics webinar.

by Joey Beachum | Jun 18, 2019 | How To Measure Anything Blogs, News

Many things seem impossible to measure – so-called “intangibles” like employee engagement, innovation, customer satisfaction, transparency, and more – but with the right mindset and approach, you can measure anything. That’s the lesson of Doug’s book How to Measure Anything: Finding the Value of Intangibles in Business, and that’s the focus of his public lecture at the University of Bonn in Bonn, Germany on June 28, 2019.

In this lecture, Doug will discuss:

- Three misconceptions that keep people from measuring what they should measure, and how to overcome them;

- Why some common “quantitative” methods – including many based on subjective expert judgment – are ineffective;

- How an organization can use practical statistical methods shown by scientific research to be more effective than anything else.

The lecture is hosted by the university’s Institute of Crop Science and Resource Conservation, which studies how to improve agriculture practices in Germany and around the world. Doug previously worked in this area when he helped the United Nations Environmental Program (UNEP) determine how to measure the impact of and modify restoration efforts in the Mongolian desert. You can view that report here. You can also view the official page for the lecture on the university’s website here.

by Joey Beachum | Jun 17, 2019 | How To Measure Anything in Cybersecurity Risk, News

In every industry, the risk of cyber attack is growing.

In 2015, a team of researchers forecasted that the maximum number of records that could be exposed in breaches – 200 million – would increase by 50% from then to 2020. According to the Identity Theft Resource Center, the number of records exposed in 2018 was nearly 447 million – an increase of well over 50%. By 2021, damages from cybersecurity breaches are projected to cost organizations $6 trillion a year. In 2017, breaches cost global companies an average of $3.6 million, according to the Ponemon Institute.

It’s clear that this threat is sufficiently large to rank as one of an organization’s most prominent risks. To this end, corporations have entire cybersecurity risk programs in place to attempt to identify and mitigate as much risk as possible.

The foundation of accurate cybersecurity risk analysis begins with knowing what is out there. If you can’t identify the threats, you can’t assess their probabilities – and if you can’t assess their probabilities, your organization may be exposed by a critical vulnerability that won’t make itself known until it’s too late.

Cybersecurity threats may vary in their specifics from entity to entity, but in general, there are several common dangers that may be flying under the radar – including some you may not have seen coming until now.

A Company’s Frontline Defense Isn’t Keeping Up the Pace

Technology is advancing at a more rapid rate than at any other point in human history: concepts such as cloud computing, machine learning, artificial intelligence, and Internet of Things (IoT) provide unprecedented advantages, but also introduce distinct vulnerabilities.

This rapid pace requires that cybersecurity technicians stay up to speed on the latest threats and mitigation techniques, but this often doesn’t occur. In a recent survey of IT professionals conducted by (ISC)^2, 43% indicated that their organization fails to provide adequate ongoing security training.

Unfortunately, leadership in companies large and small has traditionally been reluctant to invest in security training. The primary reason is psychological: decision-makers tend to view IT investment in general as an expense that should be limited as much as possible, rather than as a hedge against the greater cost of failure.

Part of the reason why this phenomenon exists is due to how budgets are structured. IT investment adds to operational cost. Decision-makers – especially those in the MBA generation – are trained to reduce operational costs as much as possible in the name of greater efficiency and higher short-term profit margins. This mindset can cause executives to not look at IT investments as what they are: the price of mitigating greater costs.

Increases in IT security budgets also aren’t pegged to the increase of a company’s exposure, which isn’t static but fluctuates (and, in today’s world of increasingly-sophisticated threats, often increases).

The truth, of course, is that while investing in cybersecurity may not make a company more money (a myopic way to judge it), it can keep a company from losing more money.

Another threat closely related to the above is how decision-makers tend to view probabilities. Research shows that decision-makers often overlook the potential cost of a negative event – like a data breach – in favor of its relatively-low probability (i.e. “It hasn’t happened before, or it probably won’t happen, so we don’t have to worry as much about it.”). These are called tail risks, risks that have disproportionate costs to their probabilities. In other words, they may not happen as frequently, but when they do, the consequences are often catastrophic.
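A back-of-the-envelope comparison shows why a low probability alone is a poor reason to ignore a risk. The two risks and their figures below are invented for illustration.

```python
# Hypothetical risks: annual probability of occurrence and loss if it occurs.
risks = {
    "frequent_minor_outage": {"annual_probability": 0.50, "loss": 200_000},
    "rare_major_breach": {"annual_probability": 0.02, "loss": 50_000_000},
}

# Expected annual loss = probability of the event times its cost.
expected_loss = {
    name: risk["annual_probability"] * risk["loss"]
    for name, risk in risks.items()
}

for name, value in expected_loss.items():
    print(f"{name}: expected annual loss of ${value:,.0f}")
```

In this made-up case the rare event carries the larger expected annual loss even though it is 25 times less likely, and an expected value still understates how damaging a single catastrophic year can be.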

There’s also a significant shortfall in cybersecurity professionals that is introducing more vulnerability into organizations that are already stressed to their maximum capacity. Across the globe, there are 2.93 million fewer workers than are needed. In North America, that number, in 2018, was just under 500,000.

Nearly a quarter of respondents in the aforementioned (ISC)^2 survey said they had a “significant shortage” in cybersecurity staff. Only 3% said they had “too many” workers. Overall, 63% of companies reported having fewer workers than they needed. And 59% said they were at “extreme or moderate risk” due to their shortage. (Yet, 43% said they were either going to not hire any new workers or even decrease the number of security personnel on their rosters.)

Less training, inadequate budgets, and fewer workers all contribute to a major threat to security that many organizations fail to appreciate.

Threats from Beyond Borders Are Difficult to Assess – and Are Increasing

Many cybersecurity professionals correctly identify autonomous individuals and entities as a key threat – the stereotypical hacker or a team within a criminal organization. However, one significant and overlooked vector is the threat posed by other nations and foreign non-state actors.

China, Russia, and Iran are at the forefront of countries that leverage hacking in a state-endorsed effort to gain access to proprietary technology and data. In 2017, China implemented a law requiring any firm operating in China to store their data on servers physically located within the country, creating a significant risk of the information being accessed inappropriately. China also takes advantage of academic partnerships that American universities enjoy with numerous companies to access confidential data, tainting what should be the purest area of technological sharing and innovation.

In recent years, Russia has noticeably increased its demand to review the source code for any foreign technology being sold or used within its borders. Finally, Iran contains numerous dedicated hacking groups with defined targets, such as the aerospace industry, energy companies, and defense firms.

More disturbing than the source of these attacks are the pathways they use to acquire this data – including one surprising method. A Romanian source recently revealed to Business Insider that when large companies sell outdated (but still functional) servers, the information isn’t always completely wiped. The source in question explained that he’d been able to procure an almost complete database from a Dutch public health insurance system; all of the codes, software, and procedures for traffic lights and railway signaling for several European cities; and an up-to-date employee directory (including access codes and passwords) for a major European aerospace manufacturer from salvaged equipment.

A common technique used by foreign actors in general, whether private or state-sponsored, is to use legitimate front companies to purchase or partner with other businesses and exploit the access afforded by these relationships. Software supply chain attacks have significantly increased in recent years, with seven significant events occurring in 2017, compared to only four between 2014 and 2016. FedEx and Maersk suffered approximately $600 million in losses from a single such attack.

The threat from across borders can be particularly difficult to assess due to distance, language barriers, a lack of knowledge about the local environment, and other factors. It is, nonetheless, something that has to be taken into consideration by a cybersecurity program – and yet often isn’t.

The Biggest Under-the-Radar Risk Is How You Assess Risks

While identifying risks is the foundation of cybersecurity, appropriately analyzing them is arguably more important. Many commonly used methods of risk analysis can actually obscure and increase risk rather than expose and mitigate it. In other words, many organizations are vulnerable to the biggest under-the-radar threat of them all: a broken risk management system.

Qualitative and pseudo-quantitative methods often create what Doug Hubbard calls the “analysis placebo effect,” where tactics are perceived to be improvements but offer no tangible benefits. This can increase vulnerabilities by instilling a false sense of confidence, and psychologists have shown that this can occur even when the tactics themselves increase estimate errors. Two months before a massive cyber attack rocked Atlanta in 2018, a risk assessment revealed various vulnerabilities, but the actions taken to fix them fell short of actually resolving the city’s exposure, although officials were confident they had adequately addressed the risk.

Techniques such as heat maps, risk matrices, and soft scoring often fail to inform an organization regarding which risks they should address and how they should do so. Experts indicate that “risk matrices should not be used for decisions of any consequence,<fn>Thomas, Philip & Bratvold, Reidar & Bickel, J. (2013). The Risk of Using Risk Matrices. SPE Economics & Management. 6. 10.2118/166269-MS.</fn>” and they can be even “worse than useless.<fn>Anthony (Tony) Cox, L. (2008), What’s Wrong with Risk Matrices?. Risk Analysis, 28: 497-512. doi:10.1111/j.1539-6924.2008.01030.x</fn>” Studies have repeatedly shown, in numerous venues, that collecting too much data, collaborating beyond a certain point, and relying on structured, qualitative decision analyses consistently produce worse results than if these actions had been avoided.

It’s easy to assume that many aspects of cybersecurity are inestimable, but we believe that anything can be measured. If it can be measured, it can be assessed and addressed appropriately. A quantitative model that circumvents overconfidence commonly seen with qualitative measures, uses properly-calibrated expert assessments, knows what information is most valuable and what isn’t, and is built on a comprehensive, multi-disciplinary framework can provide actionable data to guide appropriate decisions.
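As a minimal sketch of what a quantitative alternative to a risk matrix can look like, the code below gives each risk an annual probability and a 90% confidence interval for its loss, simulates many years, and reports the chance that total losses exceed a few thresholds. The risk names, probabilities, and loss ranges are hypothetical, and the lognormal loss distribution is an assumption chosen for the example.

```python
import math
import random

def lognormal_params(lower, upper):
    """Convert a 90% confidence interval into lognormal mu and sigma.

    A 90% interval of a normal distribution spans 3.29 standard deviations.
    """
    mu = (math.log(lower) + math.log(upper)) / 2
    sigma = (math.log(upper) - math.log(lower)) / 3.29
    return mu, sigma

# Hypothetical risks: (name, annual probability, 90% CI lower, 90% CI upper).
risks = [
    ("phishing_compromise", 0.30, 50_000, 500_000),
    ("ransomware_outage", 0.10, 200_000, 5_000_000),
    ("supply_chain_breach", 0.03, 1_000_000, 30_000_000),
]

def simulate_annual_loss(rng):
    """Total loss for one simulated year across all listed risks."""
    total = 0.0
    for _, probability, lower, upper in risks:
        if rng.random() < probability:          # did the event occur?
            mu, sigma = lognormal_params(lower, upper)
            total += rng.lognormvariate(mu, sigma)
    return total

rng = random.Random(2019)  # fixed seed so the sketch is repeatable
losses = [simulate_annual_loss(rng) for _ in range(10_000)]

def exceedance(threshold):
    """Share of simulated years in which total loss exceeded the threshold."""
    return sum(1 for loss in losses if loss > threshold) / len(losses)

for threshold in (100_000, 1_000_000, 10_000_000):
    print(f"P(annual loss > ${threshold:,}) = {exceedance(threshold):.1%}")
```

Unlike a cell on a heat map, each output is a probability that can be compared against the cost of a mitigation and checked against what actually happens over time.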

Bottom line: not all cybersecurity threats are readily apparent, and the most dangerous ones can easily be ones you underestimate, or don’t see coming at all. Knowing which factors to measure and how to quantify them can help you identify the most pressing vulnerabilities, which is the cornerstone of effective cybersecurity practices.

For more information on how to create a more effective cybersecurity system based on quantitative methods, check out our How to Measure Anything in Cybersecurity Risk webinar.

by Joey Beachum | May 22, 2019 | How To Measure Anything Blogs, News

What follows is a tale that, for business people, reads like a Shakespearean tragedy – or a Stephen King horror novel. It starts with the recent history-setting success of Avengers: Endgame and the notion that an idea has value – and if you don’t see it or won’t realize it, someone else will.

The year is 1998. As explained in this Wall Street Journal piece, Sony Pictures wanted to buy the rights to produce Spider-Man movies. Marvel Entertainment, which owned the rights, needed cash because it had just come out of bankruptcy. So, Marvel essentially told Sony that not only could it have the rights to Spider-Man, it could have the rights to almost every Marvel character for the low-low sum of $25 million.

These Marvel characters? They included Iron Man, Thor, Black Panther, and others. You may recognize those names, unless you’ve sworn off entertainment altogether for the past decade.

Sony said “No thanks, we just want Spider-Man,” and only paid $10 million in cash.

Eleven years and 22 movies later, the Marvel Cinematic Universe (MCU) has grossed a staggering $19.9 billion (as of 4/30/2019) – and they’re not done making movies, with plenty more on the way.

Sony’s decision was an epically bad one, of course, but only in hindsight. There’s no guarantee that even if they had bought the rights they would’ve had the same success. Besides, there’s no way they could’ve predicted just how valuable the franchise would turn out to be. After all, at the time, the cinematic prospects for many of the characters that were for sale were low, to say the least. Part of that is due to a decade-long slide in quality and popularity for Marvel in the 1990s that eventually led to its bankruptcy.

Measuring value for an idea is impossible. You just can’t predict what movies – or books, or songs, or works of art, or ideas in general – will be successful…right?

Finding the Next Hit: Measuring the Potential Value of an Idea

One pervasive belief is that you can’t measure or quantify an intangible thing like an idea for a movie. People believe that you can only quantify tangible things, and even then, it’s difficult to forecast what will happen.

Ideas, though, can be measured just like anything else. Can you put an exact number on an intangible concept, like whether or not a movie will be a success? No – but that’s not what measurement and quantification are, really.

At its most basic, measurement is just reducing the amount of uncertainty you have about something. You don’t have to put an exact number on a concept to be more certain about it. For example, Sony Pictures wasn’t certain how much a Spider-Man movie would make, but it was confident that the rights were worth more than $10 million.

One of the most successful superhero movies in the 1990’s – Batman Forever, starring Val Kilmer, Jim Carrey, and Tommy Lee Jones – raked in $336.5 million on a then-massive budget of $100 million.

If we’re Sony and we think Spider-Man is roughly as popular as Batman, we can reasonably guess that a Spider-Man movie could do almost as well. (Even a universally-panned superhero movie, Batman & Robin, grossed $238.2 million on a budget of $125 million.) We can do a quick-and-dirty proxy of popularity by comparing the total number of copies sold for each franchise.

Unfortunately there’s a huge gap in data for most comics between 1987 and the 2000’s. No matter. We can use the last year prior to 1998 in which there was industry data for both characters. Roughly 150,000 copies of Batman comics were sold in 1987, versus roughly 170,000 copies of Spider-Man.

Conclusion: it’s fair to say that Spider-Man, in 1998, was probably as popular as Batman was before Batman’s first release, the simply-named Batman in 1989 with Michael Keaton and a delightfully-twisted Jack Nicholson. Thus, Sony was making a good bet when it bought the rights to Spider-Man in 1998.
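The quick arithmetic behind that comparison can be laid out in a few lines, using only the figures quoted above. Keep in mind that box office gross is not profit (marketing spend and the theaters' share come out of it), so this is a rough proxy and nothing more.

```python
# Figures quoted in the text, in $ millions.
batman_forever_gross, batman_forever_budget = 336.5, 100.0
batman_and_robin_gross, batman_and_robin_budget = 238.2, 125.0
spider_man_rights_price = 10.0

# Comic copies sold in 1987, the last year with data for both characters.
batman_copies_1987 = 150_000
spider_man_copies_1987 = 170_000

# Gross-to-budget multiples for a hit and for a critically panned film.
hit_multiple = batman_forever_gross / batman_forever_budget
panned_multiple = batman_and_robin_gross / batman_and_robin_budget

# Rough popularity proxy: Spider-Man copies sold relative to Batman.
popularity_ratio = spider_man_copies_1987 / batman_copies_1987

# Even the panned film grossed far more than its budget plus the rights price.
panned_margin = batman_and_robin_gross - batman_and_robin_budget

print(f"Hit multiple: {hit_multiple:.2f}x, panned multiple: {panned_multiple:.2f}x")
print(f"Spider-Man vs. Batman comic sales: {popularity_ratio:.2f}x")
print(f"Panned-film gross minus budget: ${panned_margin:.1f}M "
      f"vs. rights price of ${spider_man_rights_price:.0f}M")
```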

Uncertainty, then, can be reduced. The more you reduce uncertainty through measurement, the better the decision will be, all other things being equal. You don’t need an exact number to make a decision; you just have to get close enough.

So how can we take back-of-the-envelope math to the next level and further reduce uncertainty about ideas?

Creating a Probabilistic Model for Intangible Ideas

Back-of-the-envelope is well and good if you want to take a crack at narrowing down your initial range of uncertainty. But if you want to further reduce uncertainty and increase the probability of making a good call, you’ll have to start calculating probability.

Normally, organizations like movie studios (and just about everyone else) turn to subject matter experts to assess the chances of something happening, or to evaluate the quality or value of something. These people often have years to decades of experience and have developed a habit of relying on their gut instinct when making decisions. Movie executives are no different.

Unfortunately, organizations often assume that expert judgment is the only real solution, or, if they concede the need for quantitative analysis, they often rely too much on the subjective element and not enough on the objective. This is due to a whole list of reasons people have for dismissing stats, math, analytics, and the like.

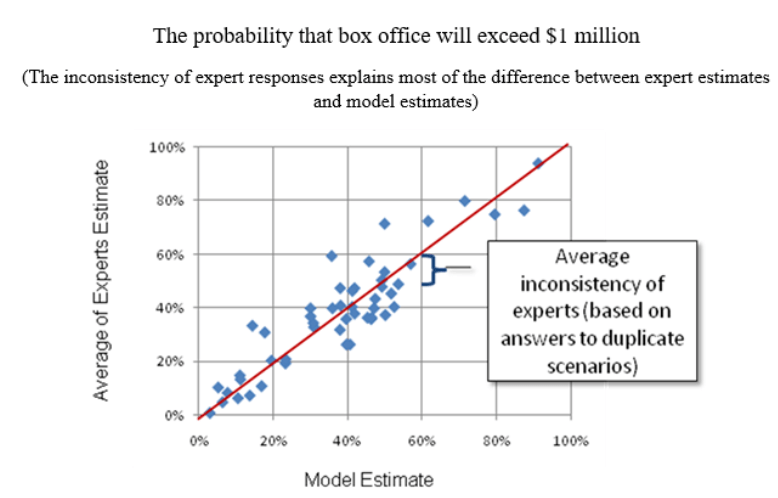

Doug Hubbard ran into this problem years ago when he was tapped to do exactly what the Sony executives should’ve done in 1998: create a statistical model that would predict the movie projects most likely to succeed at the box office. He tells the story in his book How to Measure Anything: Finding the Value of “Intangibles” in Business:

The people who are paid to review movie projects are typically ex-producers, and they have a hard time imagining how an equation could outperform their judgment. In one particular conversation, I remember a script reviewer talking about the need for his “holistic” analysis of the entire movie project based on his creative judgment and years of experience. In his words, the work was “too complex for a mathematical model.”

Of course, Doug wasn’t going to leave it at that. He examined the past predictions about box office success for given projects that experts had made, along with how much these projects actually grossed, and he found no correlation between the two. In fact, projections overestimated the performance of a movie at the box office nearly 80% of the time – and underestimated performance only 20% of the time.
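Checking a forecasting track record for this kind of bias takes very little code. The sketch below uses invented forecasts and results (not the data from the book) to count how often projections came in above the actual outcome.

```python
# Made-up expert box office projections and actual results, in $ millions.
forecasts = [12, 8, 20, 5, 15, 9, 30, 7, 11, 6]
actuals = [7, 9, 6, 2, 10, 3, 12, 8, 4, 5]

# Count the projects where the projection exceeded what the film earned.
overestimates = sum(1 for f, a in zip(forecasts, actuals) if f > a)
share_overestimated = overestimates / len(forecasts)

# Average signed error: positive means forecasts ran high on average.
mean_error = sum(f - a for f, a in zip(forecasts, actuals)) / len(forecasts)

print(f"Overestimated {share_overestimated:.0%} of projects")
print(f"Average forecast error: ${mean_error:.1f}M")
```

Unbiased forecasts would miss high about as often as they miss low; a lopsided split like the one in this invented data points to systematic optimism.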

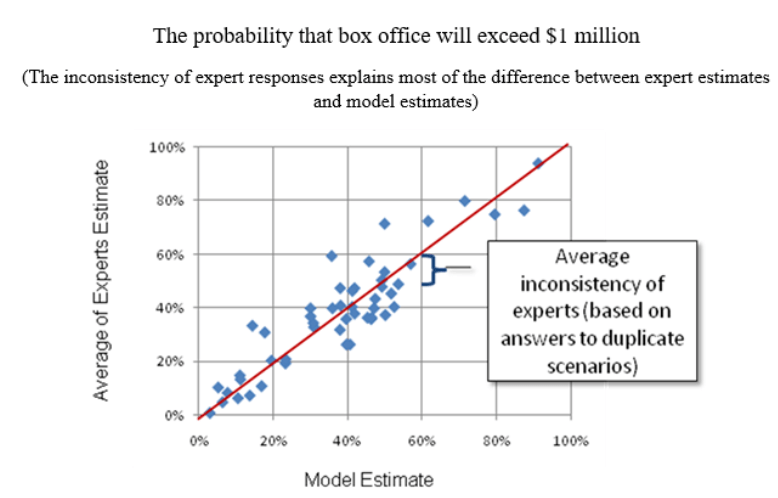

Figure 1 compares expert assessment and a model of expert estimates, using data points from small-budget indie films:

Figure 1: Comparison Between Expert Estimates and the Model Estimate

As Doug says, “If I had developed a random number generator that produced the same distribution of numbers as historical box office results, I could have predicted outcomes as well as the experts.”

He did, however, gain a few crucial insights from historical data. One was that there was a correlation between the distributor’s marketing budget for a movie and how well the movie performed at the box office. This led him to the final conclusion of his story:

Using a few more variables, we created a model that had a…correlation with actual box office results. This was a huge improvement over the previous track record of the experts.
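To make the idea concrete, here is a minimal sketch of the simplest version of such a model: a one-variable least-squares fit of box office gross on marketing budget. The data are hypothetical, the function names `fit_line` and `predict` are invented for this example, and the model described in the book used more variables than this.

```python
def fit_line(xs, ys):
    """Ordinary least-squares fit of y = intercept + slope * x."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return slope, intercept

def predict(slope, intercept, x):
    """Predicted y for a new x under the fitted line."""
    return intercept + slope * x

# Hypothetical marketing budgets and box office results, in $ millions.
marketing = [5, 10, 15, 20, 30]
box_office = [12, 25, 33, 48, 70]

slope, intercept = fit_line(marketing, box_office)
print("predicted gross for a $25M campaign:",
      round(predict(slope, intercept, 25), 1))
```

Even a rough fit like this gives a baseline that can be compared against expert forecasts on the same projects.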

Was the model a crystal ball that made perfect, or even amazingly-accurate predictions? Of course not. But – and this is the entire point – the model reduced uncertainty in a way that the studio’s current methods could not. The studio in question increased its chances of hitting paydirt with a given project – which, given just how much of a gamble making a movie can be, is immensely valuable.

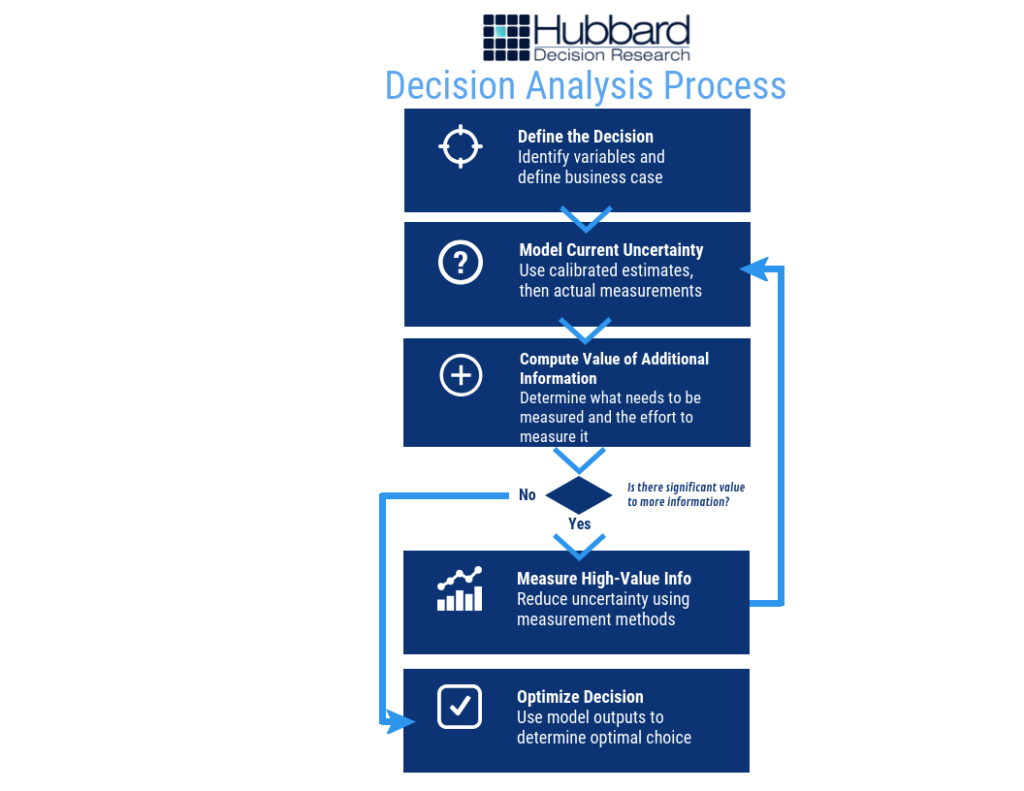

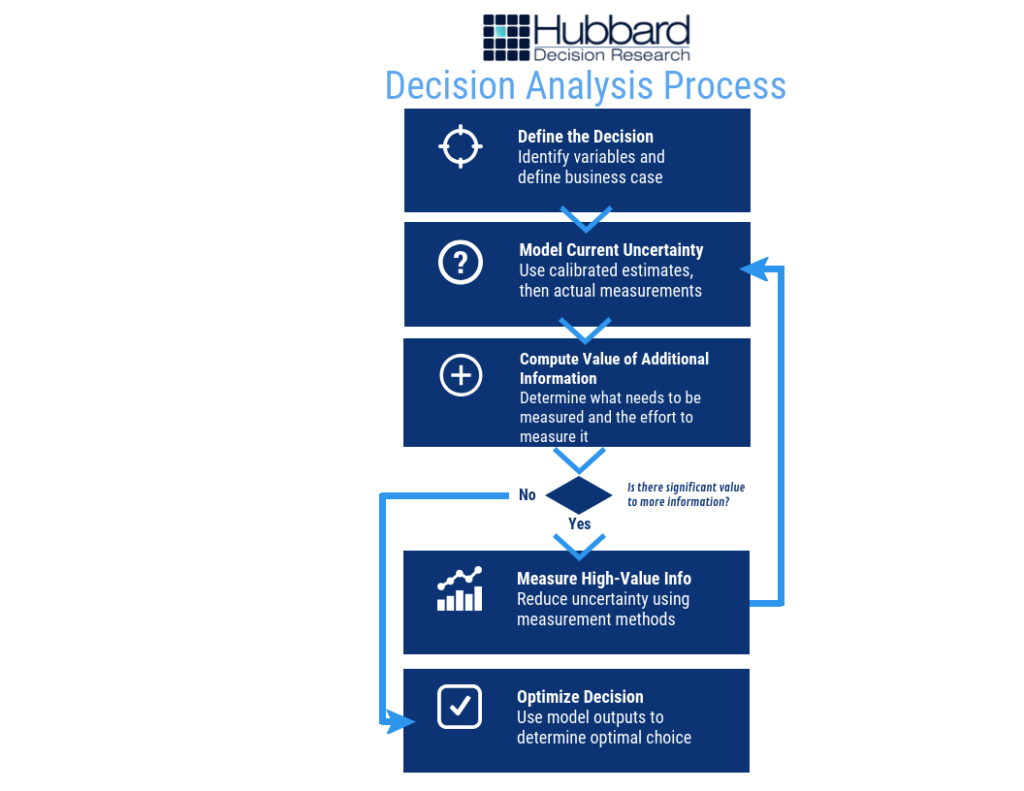

The process for creating a model is less complicated than you might think. If you understand the basic process, as shown below in Figure 2, you have a framework to measure anything:

Figure 2: Decision Analysis Process

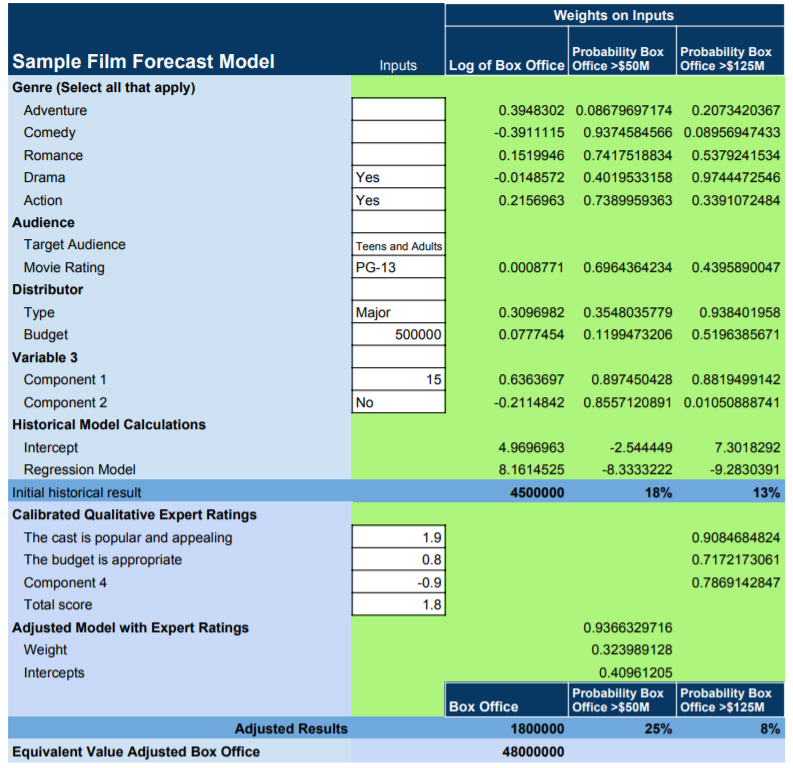

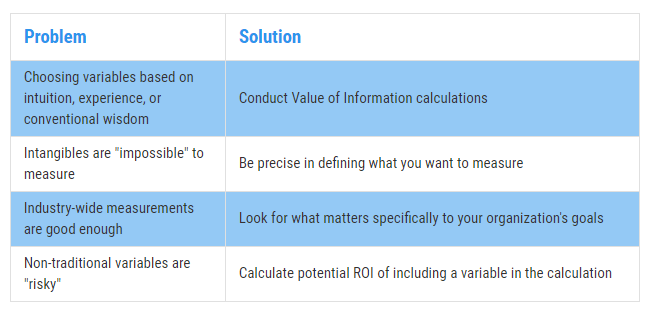

At its core, a model takes variables – anything from distributor budget for movies to, say, technology adoption rate for business projects – and uses calibrated estimates, historical data, and a range of other factors to put values on them. Then, the model applies a variety of statistical methods that research and experience have shown to be valid, and creates an output like the one in Figure 3 (the numbers are just an example):

Figure 3: Sample Film Forecast Model

What’s the Next Big Hit?

The next big hit – whether it’s a movie, an advertising campaign, a political campaign, or a ground-breaking innovation in business – can be modeled beyond mere guesswork or even expert assessment. The trick – and really, the hard part – is figuring out how to measure the critical intangibles inherent to these abstract concepts. The problem today is that most quantitative models skip them altogether.

But intangibles are important. How much your average fan loves a character, and whether that fan will spend hard-earned money to go to a movie theatre to see an upcoming film about, say, a biochemist-turned-vampire named Morbius, will ultimately help to determine success. Expressed in that desire – in any desire – are any number of innate human motivations and components of personality that can be measured.

(By the way, the aforementioned Morbius movie is being made by Sony as a part of the Marvel Cinematic Universe. Better late than never, although you don’t need a model to predict Morbius won’t gross as much as Avengers: Endgame, despite how cool the character may be.)

In this world, precious few things are certain. But with a little math and a little ingenuity, you can measure anything – and if you can measure it, you can model and forecast it and get a much better idea of what will be the next great idea – and the next big hit.

by Joey Beachum | May 18, 2019 | How To Measure Anything Blogs, News, The Failure of Risk Management Blogs

Risk management isn’t easy.

At any given time, an organization faces many risks, both known and unknown, minor and critical. Due to limited resources, not all risks can be mitigated. Without an effective risk management process, not all risks can even be identified. Thus, a risk manager’s job is to figure out how to allocate his or her resources to best protect the organization. The only way to do so in an organized fashion is to have a risk management process – but there’s a big kicker: it has to work.

And as we’ve learned from the past three decades, it’s not a given that a process works as well as it needs to. Often, unfortunately, processes just aren’t very effective, and can actually harm more than they help.

When assessing the performance and effectiveness of your risk management process, it helps if you undertake a rigorous, critical examination of the process, starting with one question: How do I know my methods work?

Before you answer, we need to clarify what this means. By “works” we mean a method that measurably reduces error in estimates, and improves average return on portfolios of decisions compared to expert intuition or an alternative method.

Note that this is not the same as merely perceived benefits. If, for example, estimates of project cost overruns are improved, that should be objectively measurable by comparing original estimates to observed outcomes. Merely using a survey to ask managers their opinions about the benefits of a method won’t do.
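As a rough sketch of what "objectively measurable" can look like, the snippet below compares two estimating methods against observed outcomes using mean absolute percentage error. The cost figures are hypothetical and the function name `mean_abs_pct_error` is chosen for this example; other error measures would serve equally well.

```python
def mean_abs_pct_error(estimates, outcomes):
    """Average of |estimate - outcome| / outcome across all projects."""
    errors = [abs(e - o) / o for e, o in zip(estimates, outcomes)]
    return sum(errors) / len(errors)

# Hypothetical project costs, in $ thousands.
actual_cost = [120, 95, 210, 160, 80]
old_method = [100, 90, 150, 120, 75]   # estimates made with the old process
new_method = [115, 100, 190, 150, 85]  # estimates made with the new process

old_err = mean_abs_pct_error(old_method, actual_cost)
new_err = mean_abs_pct_error(new_method, actual_cost)
print(f"old method error: {old_err:.1%}, new method error: {new_err:.1%}")
```

The point is that the comparison uses recorded estimates and observed results, not anyone's opinion of how well the method worked.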

Why We Can’t Rely on Our Perception

The reason we can’t rely on the mere perception of effectiveness is that we are all susceptible to a kind of “analysis placebo effect.” That is, research shows that we can increase our confidence at a task while not improving or even getting worse.

For example, it has been shown that just using more data or more “rigor”, even when there is no real measurable improvement, has increased confidence – but not accuracy – in estimating the outcomes of law enforcement interrogations, sporting events, and portfolio returns<fn>DePaulo, B. M., Charlton, K., Cooper, H., Lindsay, J.J., Muhlenbruck, L. “The accuracy-confidence correlation in the detection of deception” Personality and Social Psychology Review, 1(4) pp 346-357, 1997</fn>.

Merely having a system also doesn’t guarantee effectiveness or improvement. In one study in Harvard Business Review, the authors found that an analysis of over 200 popular management tools and processes had a surprising result: “Most of the management tools and techniques we studied had no direct causal relationship to superior business performance.”<fn>Kassin, S.M., Fong, C.T. “I’m innocent!: Effect of training on judgments of truth and deception in the interrogation room” Law and Human Behavior, 23 pp 499-516, 1999)</fn>

Throw in a myriad of reasons why humans are naturally bad at assessing probability and one can see that any risk management system predicated on subjective, uncalibrated human assessment is, by itself, inherently ineffective at best and dangerous at worst.

It makes sense, then, that if your risk management system fits the above (e.g. it has risk matrices, heat maps, and other pseudo-quantitative, subjective “measurement” systems), it may not be working nearly as well as you want.

To be sure, you have to be able to measure how well your risk management system is measuring risk.

Measuring How You Make Measurements

So, how can we measure real improvements? Ideally, some large study would have tracked multiple organizations over a long period of time and shown that some methods measurably outperform others. Did 50 companies using one method over a 10-year period actually have fewer big loss events than another 50 companies using another method over the same period? Or were returns on portfolios of investments improved for the first group compared to the second group? Or were events at least predicted better?

Large scale research like that is rare. But there is a lot of research on individual components of methods, if not the entire methodology. Components include the elicitation of inputs, controls for various errors, use of historical data, specific quantitative procedures, and so on. What does the research say about each of the parts of your method? Also, is there research that shows that these components make estimates or outcomes worse?

Let’s look at the most direct answer to how you can measure your improvements: having a quantitative model. Over 60 years ago, psychologist Paul Meehl studied how doctors and other clinicians made predictions in the form of patient prognoses and found something that was, for the time (and still today), very startling: statistical methods were consistently superior to the clinical judgments rendered by medical experts.<fn>N. Nohria, W. Joyce, and B. Roberson, “What Really Works,” Harvard Business Review, July 2003</fn> In 1989, another paper further solidified the notion that quantitative models – in this study, represented by actuarial science – outperform experts<fn>P.E. Meehl, Clinical Versus Statistical Prediction: A Theoretical Analysis and a Review of the Evidence. (Minneapolis, University of Minnesota Press, 1958)</fn>.

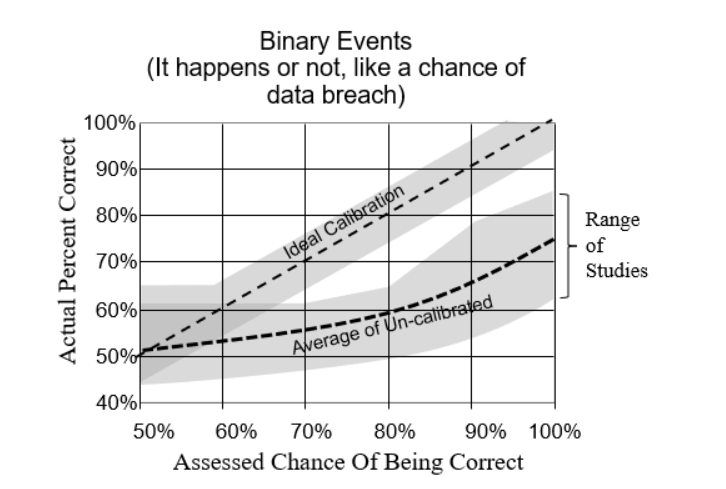

Calibrating experts so they can assess probabilities with more accuracy and (justifiable) confidence has also been shown to measurably improve the performance of a risk management system<fn>R.M. Dawes, D. Faust, and P.E. Meehl, “Clinical Versus Actuarial Judgment,” Science, 243(4899) (1989): 1668-1674</fn>. Calibration corrects for innate human biases and works for about 85% of the population. The results are quantifiable, as evidenced by the image below compiled from calibrating nearly 1,500 individuals over the past 20 years (Figure 1):

Figure 1: Difference Between Calibrated and Uncalibrated Assessments
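One simple way to check calibration, sketched here under stated assumptions, is to collect an expert's 90% confidence intervals for quantities whose true values are later known and count how often the truth falls inside. The intervals and true values below are hypothetical, and `interval_hit_rate` is a name introduced for this example.

```python
def interval_hit_rate(intervals, truths):
    """Fraction of (low, high) intervals that contain the true value."""
    hits = sum(1 for (low, high), truth in zip(intervals, truths)
               if low <= truth <= high)
    return hits / len(truths)

# Hypothetical 90% confidence intervals given by one expert,
# paired with the values that were eventually observed.
intervals = [(10, 20), (100, 150), (0.5, 0.9), (3, 8), (40, 60)]
truths = [25, 120, 0.7, 9, 55]

rate = interval_hit_rate(intervals, truths)
print(f"hit rate: {rate:.0%} (a calibrated expert would be near 90%)")
```

A hit rate far below the stated confidence level suggests overconfidence, meaning the ranges are too narrow. A real test would use many more than five questions.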

Other tools, such as Monte Carlo simulations and Bayesian methods, have also been shown to measurably improve the performance of a quantitative model. So, as we mentioned above, even if you don’t have exhaustive data to verify the effectiveness of the model in whole, you can still test the effectiveness of each individual component.
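For readers who haven't seen one, a Monte Carlo simulation can be very small. The sketch below is an illustration only and rests on several assumptions: cost and benefit are each expressed as a 90% confidence interval, each is modeled as an independent normal distribution (a 90% interval spans about 3.29 standard deviations), and all numbers and function names are hypothetical.

```python
import random

def normal_from_90ci(low, high):
    """Mean and standard deviation of a normal whose 90% CI is (low, high)."""
    mean = (low + high) / 2
    sd = (high - low) / 3.29  # a 90% interval covers about +/-1.645 sd
    return mean, sd

def probability_of_loss(cost_ci, benefit_ci, trials=10_000, seed=0):
    """Estimate the chance that benefit falls short of cost by simulation."""
    rng = random.Random(seed)  # fixed seed so results are repeatable
    cost_mean, cost_sd = normal_from_90ci(*cost_ci)
    ben_mean, ben_sd = normal_from_90ci(*benefit_ci)
    losses = 0
    for _ in range(trials):
        cost = rng.gauss(cost_mean, cost_sd)
        benefit = rng.gauss(ben_mean, ben_sd)
        if benefit < cost:
            losses += 1
    return losses / trials

# Hypothetical project: cost of $2M-$4M, benefit of $2.5M-$7M (90% CIs).
print("chance of loss:", probability_of_loss((2.0, 4.0), (2.5, 7.0)))
```

The output is a probability rather than a single point estimate, which is what lets a decision maker weigh the risk against the expected return.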

The bottom line: If you can’t quantitatively and scientifically test the performance and validity of your risk management process, then it probably is causing more error – and risk – than it’s reducing.

This research has already been done and the results are conclusive. So the only remaining question is: why not get started on improvements now?

Figure 1: Real Examples of Measurement Inversion

Figure 2: Solving the Measurement Inversion Problem